AI – threat or opportunity in the workforce?

The sudden emergence of ChatGPT late last year has got everyone talking about artificial intelligence (AI). Has the technology reached a tipping point and finally crossed into the mainstream? What do business leaders need to know about AI? And what action should they be taking right now? To find out, we asked INNOPAY’s Vince Jansen, head of our Technology Innovation Services team, which views technology as the starting point for helping our customers to drive business value in the domains of digital identity, data sharing and payments.

What is the current situation with AI, and how will it shape the future?

Even though AI has been around for a long time – in fact, I did a course in it at university back in the late 1990s – the topic suddenly seems to have exploded onto the scene. Following decades of research and technological advancement, we have reached the point where there is now sufficient data available for training models and supporting the self-learning aspect of AI, and sufficient computational power to handle those huge datasets. As a result, tech companies are finally able to start doing AI at scale and I think things will accelerate from now on; we can expect to see a flood of new AI applications over the coming decade.

As to how it will shape the future, the resurgence of Microsoft – and its search engine Bing – is already causing a shift in the tech industry, posing a previously unthinkable threat to Google. We’ve seen similar dramatic, pivotal changes happen in the past, such as when Apple usurped Nokia in the smartphone era and when Amazon started renting out excess server space in the mid-2000s, triggering the move to the cloud. In my opinion, we are now witnessing another revolutionary moment in which OpenAI – an American AI lab and company – and other new or existing companies could emerge as the new tech giants in the coming years. And this tech shift will undoubtedly cause waves in the global economy, resonating in other industries and impacting on many areas of society and life in general. But I think it’s impossible for anyone to predict the full impact with any great certainty at this stage!

"Sometimes, AI is a very convincing nonsense generator!"

What are common uses and applications for AI in the transactional domain, and where will it gain traction first?

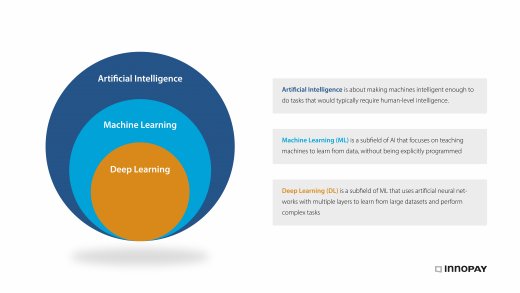

The field of AI is diverse and vast, so before zooming in on the transactional domain, I think it’s good to look at AI in general and the trends that caused the recent rise in attention on this topic.

Although perhaps unknown to the broader public, there are many examples of where AI is already supporting or replacing human tasks. In the medical space, for instance, algorithms are being developed that can diagnose diseases and design treatment plans more efficiently and effectively than current methods. AI is also often present in highly automated and/or robotised environments such as e-fulfilment warehouses and international ports. And most of us are familiar with cars that contain driving support systems nowadays. However, AI and machine-learning use cases in these application domains are typically fairly narrow and they have been optimised for single tasks.

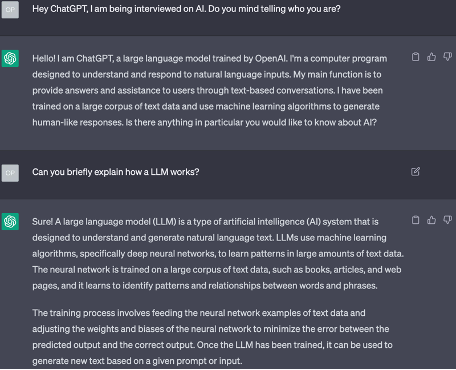

The big shift we are seeing now is in deep learning, with the rise of generative AI, such as the MidJourney text-to-image tool, and Large Language Models (LLMs). ChatGPT – running on OpenAI’s GPT model – is currently the most high-profile example of an LLM, but there are many others. So, suddenly, we’re seeing computers being creative – something we thought of as a human trait. And their speed is impressive, spitting out works of art in seconds, whereas human content creators would often need to spend hours on a single piece of work. These advanced ‘deep learning’ models have been trained on massive datasets. In response to a text prompt or an example from a user, they simply draw on all the information that has been input during the training phase. They then combine that with their ability to understand context and predict desired outcomes – such as human conversations in the case of LLMs – to generate new text, images, videos, audio or 3D models. In other words, the essence of AI revolves around computer models that are able to analyse huge volumes of data in order to make increasingly accurate predictions.

In terms of how this will impact the transactional domain, AI could add value in any kind of data-driven environment where predictions are required. In the financial sector, for example, there is huge potential in compliance with anti-money laundering (AML) and counter-terrorist financing (CTF) regulations – where AI can look for patterns and automate process steps – and in utilising customer data to predict, and subsequently improve, the success of marketing efforts for retail products. On a broader level, AI could make a key difference across various domains linked to all kinds of societal challenges, from farming – by contributing to crop monitoring, predicting soil quality and optimising water management – to the energy transition. Nowadays, as more consumers own solar panels and electric cars that can deliver energy back to the grid, the energy market is evolving into a two-sided market with a dynamic pricing structure. AI-based predictions and analysis have the potential to transform this market by creating the conditions for a decentralised ecosystem of consumers and energy grids. For example, I can imagine energy providers developing a whole new set of services, such as AI-driven trading tools or apps based on peering mechanisms that help consumers to monitor real-time market prices and adjust their energy consumption accordingly. Over time, this could even become controlled autonomously, eliminating the human role in such transactions.

If AI means that previously human-based transactions will become increasingly autonomous, how will professionals be affected?

For many professionals, the underlying thought is ‘Will AI put me out of a job? And if so, what do I need to do to prepare for that?’. To provide a short answer: AI won’t take your job, but another person using AI might. We already know that AI can work faster and more accurately than people for various tasks, so there will definitely be some jobs – including some highly skilled roles – that will no longer need to be performed by humans. However, for AI to achieve the desired level of quality, the models must be trained – and for that, you need domain experts. Therefore, rather than becoming totally obsolete, many professionals are more likely to find that their job description changes. People’s roles could become more about analysing and refining the output of an AI model based on their own knowledge and skills, or about using their own critical thinking to draw conclusions and make decisions based on AI-generated insights. Because that’s an aspect people may forget: these models do get it wrong, but the mistakes may be hard to spot. Sometimes, AI is a very convincing nonsense generator!

Needless to say, many people are averse to change and some will struggle to accept or adjust to the new way of working, in which case they will probably want to look for a different kind of job. However, I believe there will be plenty of opportunities for professionals who embrace AI as a tool for efficiency and effectiveness and are willing to put their skills to good use to contribute to its continued enhancement.

Finally, I believe that – as a society – there is a choice to make. What will we do with the freed-up capacity in the workforce? Perhaps this is me being over-optimistic, but I hope this will lead to a society with enough people to work on the energy transition, or in human-centric healthcare, or to be artists.

"AI won't take your job, but another person using AI might."

Besides potential job losses, what are the other key concerns related to AI?

Obviously, there is some unease about what kind of ‘superpower’ we have unleashed. Such concerns were expressed recently in the letter about AI from over 1,500 tech researchers, professors and developers, including the likes of Elon Musk and Steve Wozniak, in which they described AI as posing “profound risks to society and humanity”. However, they went on to write that AI can offer humanity a “flourishing future,” providing that it is well managed, and I think that’s the key issue here. Even if all governments somehow managed to agree to pause the developmental work on AI, that would be unenforceable. So, I think it’s too late for that now – the genie is out of the bottle. Instead, we need to focus on managing the associated concerns. A lot of the apprehension comes from not truly understanding AI; the fact that today’s models can imitate near-human behaviour is very impressive, but ultimately they are just prediction models based on huge volumes of data – there’s no ‘magic’ involved and they are not sentient. But there is of course the potential to develop powerful applications that could be used or abused for economic or geopolitical influence, so some fears are certainly justified.

Besides this, AI raises a number of ethical issues. One issue relates to the fact that AI deep learning models are already becoming so good at generating creative content that the volume of AI-generated content is likely to increase dramatically. But what will this mean for quality and authenticity? How will we be able to know or check what’s fake and what isn’t – and will we even care? Another big concern is that it’s currently unclear how the AI models obtain the data they use, so this presents issues related to intellectual property rights and data sovereignty, i.e. the right to control your own data. After all, there’s currently no way for people or organisations to give – or withhold – their consent for their data to be used for AI purposes. Besides that, various real-life examples have made it clear that bias can seep into AI models, resulting in unfair outcomes for certain groups, so this is definitely an important area that requires attention.

Personally, I am happy to be in the EU, where we have set a track record in principle-based, human-centric laws that protect individuals, such as GDPR. Currently, a similar AI Act is in the making. And in my view, another concern that hasn’t received much coverage so far is the sheer amount of electricity required to power all the servers. How does this huge energy footprint fit in with society’s broader efforts to save the planet, and what can be done about it?

What should organisations be doing to benefit from the opportunities of AI?

The first step is to accept that we’ve entered the era of AI; it’s definitely here to stay. Now it’s time to take an interest in what it can do and learn how to use it to your advantage. That starts with the right organisational culture and mindset, with clear support from the top down. I actually see a lot of parallels with the emergence of the data economy and ‘everything transaction’ thinking over the past decade, in the sense that businesses must be capable of change or risk getting left behind. An AI roadmap can play a valuable role, and the process is pretty similar to developing an API strategy, for example. Importantly, rather than being merely a ‘tech project’, AI should be approached as an opportunity to create new value for your business. This should ideally be supported by a multidisciplinary team – including external domain experts, if necessary – for exploring the opportunities of AI within the organisation’s own context: a kind of ‘centre of excellence’ for AI.

The key question is how you can use these data-driven, predictive building blocks to solve problems: either by developing new products and services for your customers, or by improving the effectiveness and efficiency of your operations. Once this has been established, the team can outline a vision and direction, then focus on getting others on board, educating them and leading the way. Within this process, it’s important to recognise and address any associated concerns and pitfalls such as the risk of bias and the need for transparency. At least some of the ‘big techs’ have already appointed ethical commissions to monitor this. Also, despite the strong sense of urgency and the need to make a start on AI, it’s important to bear in mind that the road to maturity will be a long one. Building an effective dataset and training an AI model tailored to your specific needs is not a one-time task; it’s a complex, reiterative process of reinforcement learning and fine-tuning that benefits from an agile approach. However, we’re already seeing some pre-trained models emerging and eventually the technology will probably become more of a commodity – a kind of true ‘open AI’ – so that organisations can focus on how to use ready-made building blocks to solve their own problems. While this will make it easier, it will still be a lot more challenging than building a website or an app.

We also need to remember that, for AI models to be a success, you need to keep feeding them with relevant data. This means it will become even more essential to get all kinds of organisations to collaborate and share data with one another safely, securely and conveniently. Therefore, topics such as data sovereignty and ecosystem thinking will continue to gain in importance. That’s why I believe that, when it comes to tapping into AI’s potential for good, organisations that are already embracing the opportunities of data sharing and open APIs will have an advantage over those that have so far been lagging behind. Personally, I’m very excited about helping organisations to explore this uncharted territory so that this technology can become an enabler of their strategic goals.